Siamese Neural Network

An architecture for comparing pairs of inputs

from fastai_datasets.all import *

DistanceSiamese

DistanceSiamese (backbone:fastai.torch_core.Module, distance_metric=<function normalized_squared_euclidean_distance>)

Outputs the distance between two inputs in feature space

| | Type | Default | Details |
|---|---|---|---|
| backbone | Module | | embeds inputs in a feature space |
| distance_metric | function | normalized_squared_euclidean_distance | |
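The idea behind the signature above can be sketched without any framework: the same backbone embeds both inputs, and the distance metric compares the two embeddings. This is only an illustration in plain Python, not the library's implementation; `TinyDistanceSiamese`, `toy_backbone`, and `squared_euclidean` are hypothetical names introduced here.

```python
class TinyDistanceSiamese:
    """Hypothetical stand-in: shares one backbone across both inputs."""

    def __init__(self, backbone, distance_metric):
        self.backbone = backbone
        self.distance_metric = distance_metric

    def __call__(self, x1, x2):
        # Both inputs go through the *same* backbone (shared weights).
        f1 = self.backbone(x1)
        f2 = self.backbone(x2)
        # The output is a single distance in feature space.
        return self.distance_metric(f1, f2)


def toy_backbone(x):
    # Assumed toy embedding: a fixed linear map of a 2-element input.
    return [x[0] + x[1], x[0] - x[1]]


def squared_euclidean(f1, f2):
    # Plain squared Euclidean distance, used here only to keep the sketch short.
    return sum((a - b) ** 2 for a, b in zip(f1, f2))


model = TinyDistanceSiamese(toy_backbone, squared_euclidean)
d_same = model([1.0, 2.0], [1.0, 2.0])  # identical inputs
d_diff = model([1.0, 2.0], [3.0, 2.0])  # different inputs
```

Because the backbone is shared, identical inputs always map to distance zero, whatever the backbone computes.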

ContrastiveLoss

ContrastiveLoss (margin:float=1.0, size_average=None, reduce=None, reduction:str='mean')

Same as loss_cls, but flattens input and target.
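The page does not spell out the formula behind `ContrastiveLoss`. A common margin-based form, shown here as an assumption rather than as this library's exact definition, penalizes positive pairs by their squared distance and negative pairs only when they fall inside the margin. Variants differ in squaring and scaling factors. The function name and the `is_same` labels below are hypothetical.

```python
def contrastive_loss(distances, is_same, margin=1.0):
    """Mean margin-based contrastive loss over a batch of pairs.

    distances: non-negative distance for each pair
    is_same:   1 (or True) for a positive pair, 0 (or False) for a negative pair
    """
    per_pair = []
    for d, same in zip(distances, is_same):
        if same:
            # Positive pairs are pulled together: any distance is penalized.
            per_pair.append(d ** 2)
        else:
            # Negative pairs are pushed apart, but only up to the margin.
            per_pair.append(max(0.0, margin - d) ** 2)
    return sum(per_pair) / len(per_pair)


loss_positive = contrastive_loss([0.5], [1])
loss_negative_inside = contrastive_loss([1.0], [0], margin=1.5)
loss_negative_outside = contrastive_loss([2.0], [0], margin=1.5)
```

Under this form, a negative pair that is already farther apart than `margin` contributes nothing to the loss.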

normalized_squared_euclidean_distance

normalized_squared_euclidean_distance (x1, x2)

Squared Euclidean distance over normalized vectors: \[\left\| \frac{x_1}{\|x_1\|}-\frac{x_2}{\|x_2\|} \right\|^2 \]
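As a worked example of the formula above, here is a minimal sketch in plain Python (the library function operates on tensors; `normalized_sq_dist` is a hypothetical stand-in). For unit vectors the squared distance equals 2 − 2·cos(x1, x2), so the result always lies between 0 (same direction) and 4 (opposite directions).

```python
import math


def normalized_sq_dist(x1, x2):
    """Squared Euclidean distance after scaling both vectors to unit length.

    Assumes neither vector is all zeros (the norm would be 0).
    """
    n1 = math.sqrt(sum(a * a for a in x1))
    n2 = math.sqrt(sum(b * b for b in x2))
    return sum((a / n1 - b / n2) ** 2 for a, b in zip(x1, x2))


# Scale does not matter, only direction does.
same_direction = normalized_sq_dist([1.0, 2.0], [2.0, 4.0])
orthogonal = normalized_sq_dist([1.0, 0.0], [0.0, 3.0])
opposite = normalized_sq_dist([1.0, 1.0], [-2.0, -2.0])
```

The three cases illustrate the range: vectors pointing the same way give a distance near 0, orthogonal vectors give 2, and opposite vectors give a value near 4.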

DistanceSiamese.plot_distance_histogram

DistanceSiamese.plot_distance_histogram (pairs_dls:Union[fastai.data.core.TfmdDL,Dict[str,fastai.data.core.TfmdDL]], label='Distance')

Plots a histogram of intra-class and inter-class distances
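The data preparation behind such a plot can be sketched as follows: distances are split by whether the pair shares a class, then binned separately. This is an assumption about the general approach, not the method's actual code; the helper names and the sample numbers are made up for illustration.

```python
from collections import Counter


def split_by_label(distances, is_same):
    """Separate distances of same-class pairs from different-class pairs."""
    intra = [d for d, s in zip(distances, is_same) if s]
    inter = [d for d, s in zip(distances, is_same) if not s]
    return intra, inter


def bin_counts(values, bin_width=0.5):
    """Count values per bin, keyed by the bin's left edge."""
    return Counter(int(v // bin_width) * bin_width for v in values)


# Made-up distances: the first three pairs are same-class, the rest are not.
distances = [0.2, 0.4, 0.6, 1.3, 1.6, 1.7]
is_same = [1, 1, 1, 0, 0, 0]

intra, inter = split_by_label(distances, is_same)
intra_hist = bin_counts(intra)
inter_hist = bin_counts(inter)
```

The less the two histograms overlap, the easier it is to pick a distance threshold that separates positive pairs from negative ones.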

classifier = resnet34(weights=ResNet34_Weights.DEFAULT)

siamese = DistanceSiamese(create_body(model=classifier, cut=-1))pairs = Pairs(Imagenette(160), .1)

dls = pairs.dls(after_item=Resize(128),

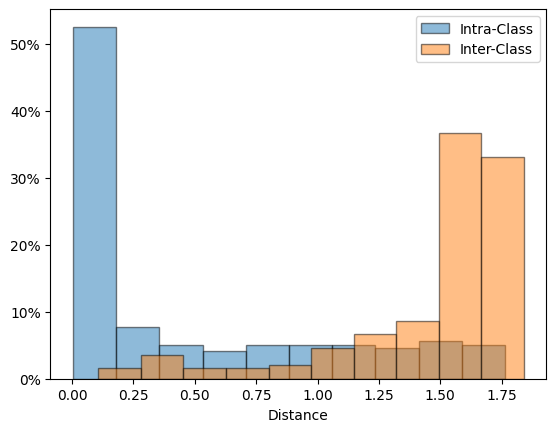

after_batch=Normalize.from_stats(*imagenet_stats))When starting with a decent backbone, positive pairs are closer than negative pairs:

siamese.plot_distance_histogram(dls.valid)

Train with contrastive loss:

learn = Learner(dls, siamese, ContrastiveLoss(margin=1.5))

learn.fit_one_cycle(3)

| epoch | train_loss | valid_loss | time |
|---|---|---|---|
| 0 | 0.431103 | 0.475045 | 00:12 |
| 1 | 0.325288 | 0.382971 | 00:16 |
| 2 | 0.272254 | 0.308985 | 00:17 |

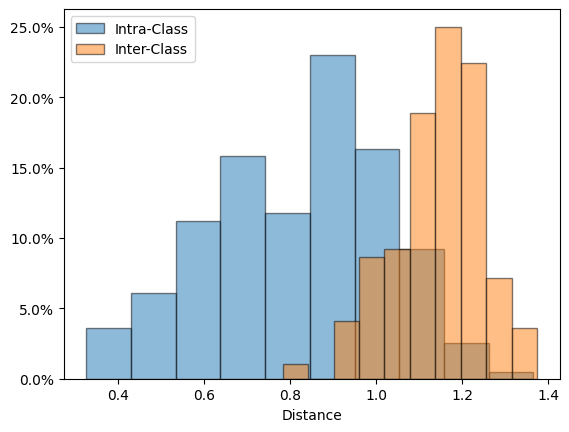

siamese.plot_distance_histogram(dls.valid)